In part one of this blog series, we covered some of the basic definitions of, and differences among, Business Continuity, Disaster Recovery, and High Availability, and talked about Active/Active and the varying degrees of Active/Standby for protecting your systems. Now I want to focus on how you determine the most appropriate level of protection for your business applications and the supporting data.

One of the most common things I hear is that we need to protect the entire EDW Platform at the same level. In my experience, all data is NOT equal. Some data is required for critical processes, but much of it is just “nice to have.” The first order of priority in any Business Continuity and DR Planning exercise is to classify your systems and data assets based on which business processes they support and ensure that all your assets are protected appropriately. So how do you identify your most critical assets?

Before we dive in, I want to make something clear. Most of the work I have been doing over the last decade has focused in on protecting customer data in a relational database, but disaster recovery and business continuity planning is NOT just about protecting data at rest in a database. It MUST also include, at a minimum, some of the following:

The bottom line is that your goal is to be able to continue business operations following a disaster event. Keeping a good backup of just your data will do no good if users, permissions, and software releases on your DR Platform are woefully out of date.

Back to identifying your most critical assets! To determine this, I will usually ask questions like:

- “What is the impact to your business if they are unable to access their application for one hour? One day? One week?”
- “Is the answer to the above question dependent on time of day? Day of week? Week of month?”

The first answer I usually get back is that any outage is totally unacceptable. At this point I ask “Why? What is it that needs to get done in that timeframe and what is the cost to the business if it is not done?”

At this point we need to discuss what we mean by “cost to the business.” A loss of capability/functionality does not always have a direct monetary impact. There are many other things we need to work into the calculation. Some examples are:

- Reputation/Loyalty loss – of a customer, a supplier, a business partner?
- Financial Performance loss – stock price, credit rating, revenue recognition?
- Labor/Productivity loss – employee downtime, contractor commitments?
- Other losses – regulatory or legal obligations, travel expenses, equipment rental?

The bottom line here is that the “Cost of an Outage” can be quite complex and monetary loss is not always the biggest concern of the business.
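To make that point concrete, here is a minimal Python sketch of tallying an outage estimate by loss category. The `OutageImpact` class, the category names, and every figure are hypothetical placeholders chosen for illustration, not data from a real assessment.

```python
from dataclasses import dataclass
from typing import Dict

# Loss categories mirroring the list above; the names are illustrative only.
CATEGORIES = ("reputation", "financial", "productivity", "other")


@dataclass
class OutageImpact:
    """Estimated impact of one outage scenario for one business function."""
    business_function: str
    outage_length: str
    losses: Dict[str, float]  # estimated loss per category, same currency

    def total(self) -> float:
        # Categories that were not estimated count as zero.
        return sum(self.losses.get(c, 0.0) for c in CATEGORIES)

    def largest_category(self) -> str:
        # The category that dominates the estimate.
        return max(CATEGORIES, key=lambda c: self.losses.get(c, 0.0))


# Hypothetical numbers for a one-day outage.
impact = OutageImpact(
    business_function="Financial Reporting",
    outage_length="one day",
    losses={"reputation": 20_000, "financial": 5_000,
            "productivity": 8_000, "other": 40_000},
)

print(impact.total())             # 73000
print(impact.largest_category())  # other
```

In this made-up scenario the regulatory and legal exposure in the "other" bucket outweighs the direct financial loss. Where a category cannot be priced credibly, a relative score agreed with the business could stand in for a currency amount.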

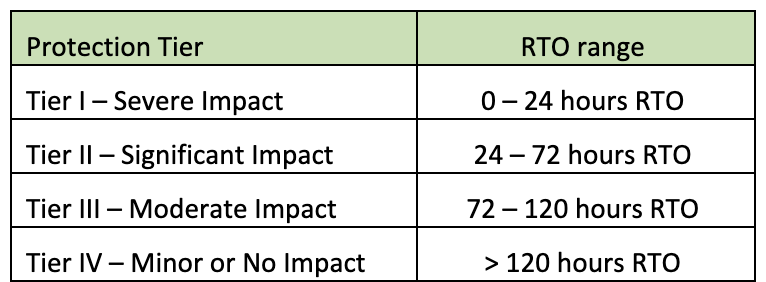

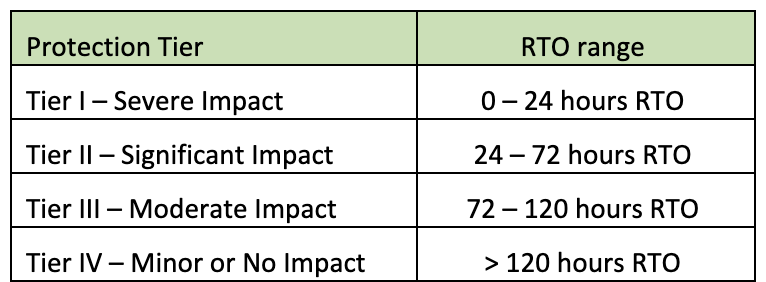

When calculating the cost of an outage, it is important that we break it down by “application” or, more importantly, by business function. I have found that “application” can be a very nebulous term that means different things to different folks. Therefore, I like to focus more on business functions like Marketing Campaigns, Customer Relationship Management, Risk Management, Financial Reporting, etc. Generally, we need to identify the things that your business users do or provide regularly that bring value to the company, and then rationalize the “costs” if those things were unavailable. This process is called a “Business Impact Analysis.” To make this process easier, I would recommend coming up with a classification system based on how quickly the business functions need to be back online after an outage; this is called the Recovery Time Objective (RTO). The following is an example of classifications used by some customers:

While the RTO is critical, there is another important metric that must also be considered as part of the plan: the state of the data once the functionality is returned. This is called the Recovery Point Objective (RPO). Many definitions of RPO indicate that it is “the amount of data loss that is acceptable.” This definition most likely has its roots in the Backup and Restore world, where your last backup was the only restore point to recover to. When RTO and RPO are very low, being able to recover from a backup becomes difficult if not impossible, so I like to expand this definition with business users and ask the question, “What is your tolerance for data freshness when an application is returned to service?” Framed this way, we are not accepting any “Data Loss”; we are agreeing on how current the data must be. On this point, there is one more clarification needed to make sure everybody is on the same page: is the RPO target relative to the point of failure, or is it relative to the point of availability after an outage? Let me clarify this with an example:

We have an ETL process that loads data every 15 minutes into a relational database. This data is queried every 10–15 minutes to keep a dashboard of KPIs updated. We have a stated RTO of one hour on this process and an RPO of 0. So, if we experience an outage on the relational database platform at 1:00pm, then we must be back up and operational by 2:00pm. But with an RPO of 0, does that mean the state of the data at 2:00pm is “as of the time of the failure at 1:00pm,” or “current at the time of recovery at 2:00pm”?

Some folks will assume it is as of the time of the failure, but most business folks will assume it is as of the time the system is back online. So, however you decide to interpret Recovery Point Objective, I strongly suggest making sure that everybody is crystal clear on exactly what will be provided when a failed system is recovered.
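The difference between the two readings can be spelled out in a few lines of Python. This is only a sketch: the function names and the `relative_to` parameter are made up for illustration, the calendar date is arbitrary, and the catch-up count assumes the loads run on a fixed 15-minute schedule with the last successful load finishing right at the moment of failure.

```python
from datetime import datetime, timedelta


def freshness_target(failure: datetime, recovery: datetime,
                     rpo: timedelta, relative_to: str) -> datetime:
    """Oldest 'as of' time the data may have at recovery and still meet the RPO."""
    if relative_to == "failure":
        return failure - rpo
    if relative_to == "recovery":
        return recovery - rpo
    raise ValueError("relative_to must be 'failure' or 'recovery'")


def loads_to_catch_up(failure: datetime, recovery: datetime,
                      load_interval: timedelta) -> int:
    """Scheduled loads that fall inside the outage window (assumed fixed schedule)."""
    return (recovery - failure) // load_interval


# The date is arbitrary; the times match the example above.
failure = datetime(2024, 1, 1, 13, 0)
recovery = failure + timedelta(hours=1)  # RTO of one hour
rpo = timedelta(0)                       # RPO of 0

print(freshness_target(failure, recovery, rpo, "failure"))   # 2024-01-01 13:00:00
print(freshness_target(failure, recovery, rpo, "recovery"))  # 2024-01-01 14:00:00
print(loads_to_catch_up(failure, recovery, timedelta(minutes=15)))  # 4
```

Under the "recovery" reading, the four loads that were due during the outage have to be replayed before the dashboard can be called current at 2:00pm, which is a very different commitment from restoring the 1:00pm state.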

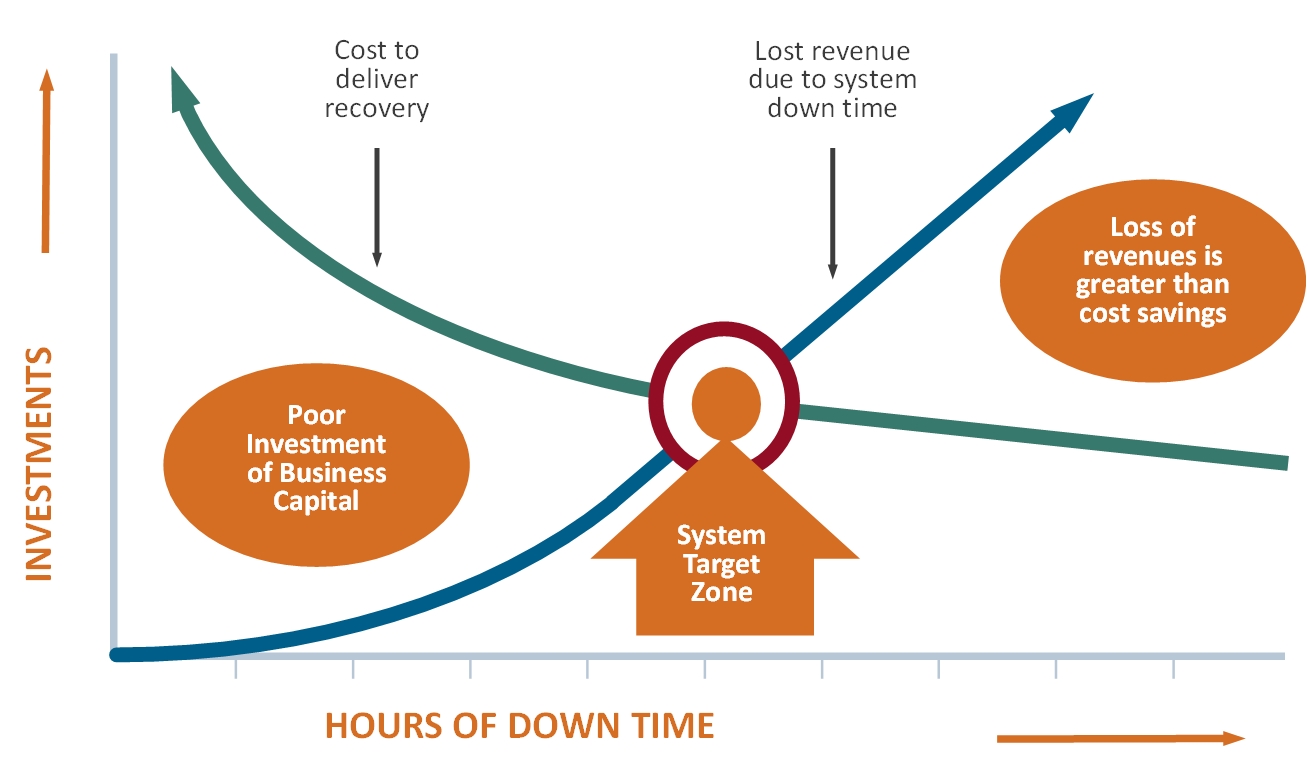

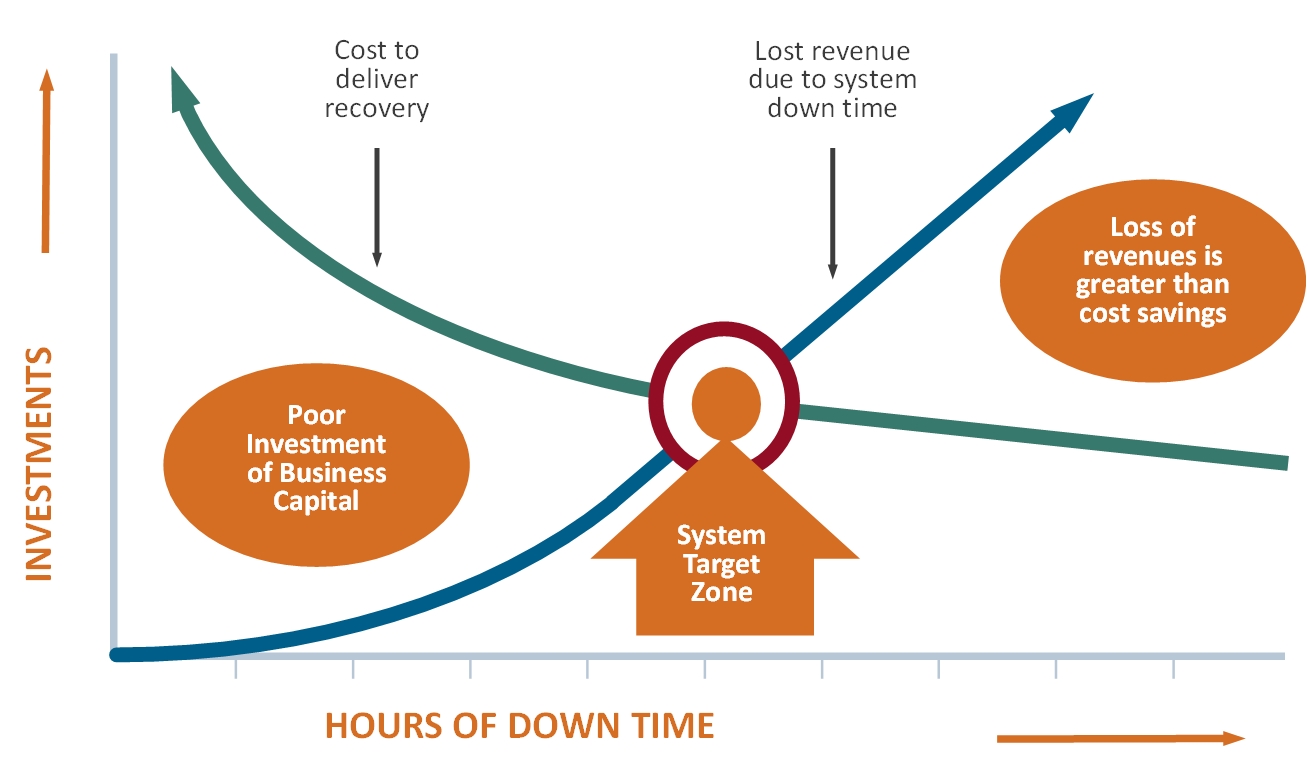

In summary, the key to a successful Business Continuity Plan and the associated Disaster Recovery Plans is to really understand the value that it is bringing to the business and to weigh that against the cost to implement and maintain the appropriate level of protection. As depicted in the cost-versus-benefit diagram, if you deliver a high-cost solution that reduces or eliminates any downtime, but the benefit to the business is low, then that is a poor investment. Conversely, if you implement a low-cost solution that provides limited protection for a critical process, and many hours of downtime result in significant loss to the business, then the cost savings are completely overshadowed by the loss should an outage occur.
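The same trade-off can be put into rough numbers. The sketch below is one simple way to do it, not a prescribed method: for each protection option it adds the yearly cost of the solution to the expected yearly loss from the downtime that remains, then picks the lowest total. The `ProtectionOption` class, the option names, the costs, and the downtime figures are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ProtectionOption:
    name: str
    annual_cost: float              # cost to implement and maintain, per year
    expected_downtime_hours: float  # expected downtime per year with this option


def total_annual_cost(option: ProtectionOption, loss_per_hour: float) -> float:
    # Solution cost plus the expected loss from the downtime that remains.
    return option.annual_cost + option.expected_downtime_hours * loss_per_hour


def best_option(options: List[ProtectionOption],
                loss_per_hour: float) -> ProtectionOption:
    return min(options, key=lambda o: total_annual_cost(o, loss_per_hour))


# Hypothetical options and figures, for illustration only.
options = [
    ProtectionOption("backup and restore", 50_000, 48),
    ProtectionOption("active/standby", 250_000, 4),
    ProtectionOption("active/active", 900_000, 0.1),
]

print(best_option(options, loss_per_hour=500).name)        # backup and restore
print(best_option(options, loss_per_hour=100_000).name)    # active/standby
print(best_option(options, loss_per_hour=1_000_000).name)  # active/active
```

With these made-up inputs, the cheapest option wins for a low-value function and the most expensive one only pays off when an hour of downtime is extremely costly.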

Finding the “Target Zone” for each of your applications is critical to maximizing your investment while also allowing you to sleep easy at night knowing that should the unthinkable happen, you have a plan and you are protected!
